Short Bio

PhD student at the University of California, Irvine (UCI), advised by Stephan Mandt. Expert programming knowledge with 10+ years of experience across a diverse range of languages and tasks, including a long history of successful machine learning projects. Research at the intersection of Computer Science and Mathematics, currently focused on Deep Generative Models, Neural Data Compression, and the application of Machine Learning to Climate Science.

Experience

Skills

Education

Languages

Interests

-

October 2020 - December 2022

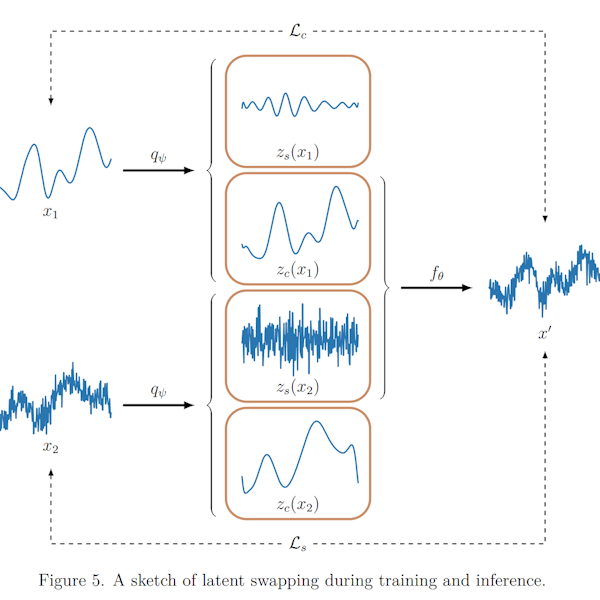

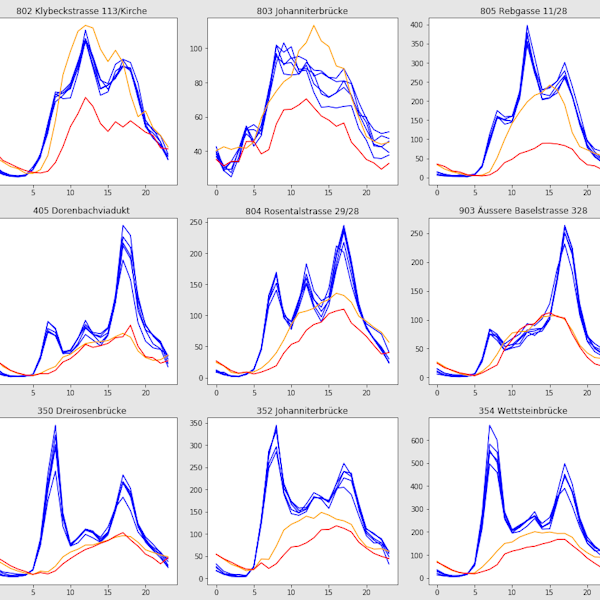

Research Assistant, TU Kaiserslautern1, Machine Learning Group

Conducted various research of use in chemical process engineering and beyond. Developed a new tensor completion framework to make predictions for sparse tabular data and style-transfer methods for time series. Ongoing collaboration, including as invited speaker at a Dagstuhl seminar. -

October 2019 - May 2020

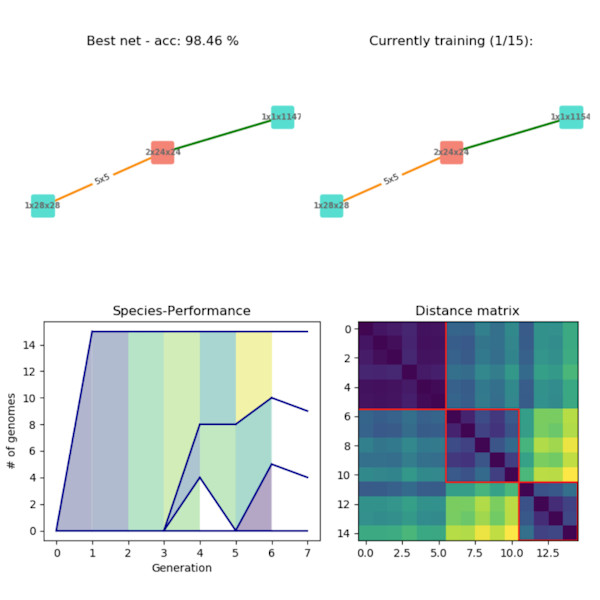

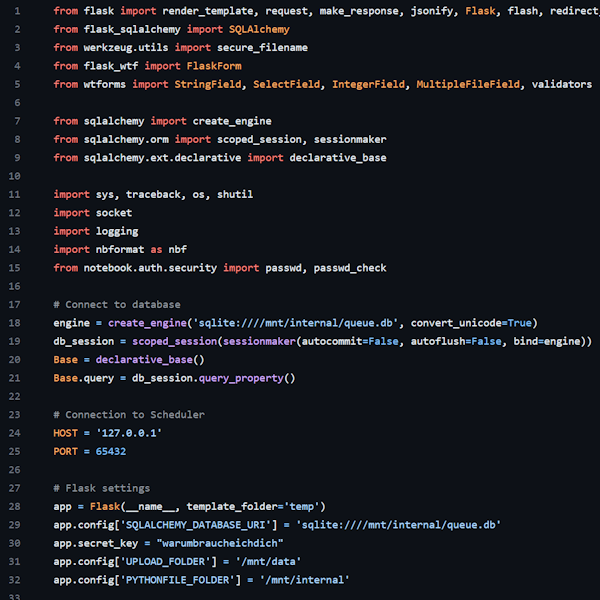

Research Assistant, German Research Center for Artificial Intelligence (DFKI)

Developed an evolutionary algorithm to optimize the topology and hyperparameters of convolutional networks. Designed a front and back end providing 50+ users intuitive access to the local GPU computation cluster. -

September 2018 - Current

Student / Teaching Assistant, TU Kaiserslautern1 / UC Irvine

Supported 1000+ students across 10+ courses in various roles as supervisor, mentor, advisor, educator, and examiner. Topics include probability theory, statistics, scientific computing, programming, machine learning, and more. - 1 Since 2023: RPTU in Kaiserslautern

- Python

Expert [8+ years] − Machine Learning Research, Data Analysis, Visualization, Hackathons, Coding Competitions, Tool and Application Development, Educator. PyTorch, TensorFlow, Sklearn, Django, etc. - R

Expert [3+ years] − Statistical Analysis, Data Analysis, Educator. - MATLAB

Expert [3+ years] − Optimization, Numerical Methods, Scientific Computing, Educator. - Java

Expert [5+ years] − App and Game Development, Distributed Computing, Algorithm Design, Educator. - C / C++

Advanced [2+ years] − Software Development, Algorithms and Data Structures. - HTML / CSS / JavaScript

Advanced [2+ years] − Full-Stack Development with Python/Django Back-End. - Others

Git, CUDA, Slurm, Docker, Kubernetes, SQL, Google/Microsoft Office Suite, VBA, Latex, UML, AI Tools, etc.

- German

Fluent - English

Fluent - French

B2 / Advanced Mid − 7+ years of instruction, student exchange with Ermont, France. - Spanish

A2 / Intermediate High − 2+ years of instruction. - Swedish

A2 / Intermediate Mid − 1+ years of instruction, semester abroad at Lund University, Sweden.

-

2023 - 2026 [est.]

Ph.D. Computer Science, UC Irvine, USA -

2020 - 2022

M.Sc. Mathematics, TU Kaiserslautern1, Germany (and Lund University, Sweden) − GPA 3.92 (Intl: 1.1) -

2018 - 2020

B.Sc. Computer Science, TU Kaiserslautern1, Germany − GPA 3.92 (Intl: 1.1) -

2017 - 2020

B.Sc. Mathematics, TU Kaiserslautern1, Germany − GPA 3.92 (Intl: 1.1) - 1 Since 2023: RPTU in Kaiserslautern

- Sports

Snowboarding, Surfing, Volleyball, Football - Culture

Travel, Cooking, Winemaking